Hey folks! Thanks for joining me in my first render of Offscreen canvas. I'm Daniel, and this is my place to discover and share things I love about WebGL, real-time rendering, and creative coding.

If you have a topic you think I should cover, feel free to reply to this email or reach out on Twitter!

Particle systems like the ones in this week's demos are mainly built on two techniques: geometry instancing and GPGPU.

Geometry Instancing is a technique where we send the GPU geometry data once and tell it to draw that geometry 10,000 times.
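To make that a bit more concrete, here is a minimal sketch of the per-instance data side of instancing. The helper name `buildInstanceOffsets` and the grid layout are assumptions for illustration only; the geometry itself is uploaded once, and this buffer only tells the GPU where each copy goes.

```typescript
// Hypothetical helper: packs one xyz offset per instance into a flat buffer,
// laying the instances out on a simple cubic grid.
function buildInstanceOffsets(count: number, spacing: number): Float32Array {
  const offsets = new Float32Array(count * 3);
  const side = Math.ceil(Math.cbrt(count)); // instances per grid edge
  for (let i = 0; i < count; i++) {
    offsets[i * 3 + 0] = (i % side) * spacing;
    offsets[i * 3 + 1] = (Math.floor(i / side) % side) * spacing;
    offsets[i * 3 + 2] = Math.floor(i / (side * side)) * spacing;
  }
  return offsets;
}

// One small buffer describes 10,000 copies of the same geometry.
const offsets = buildInstanceOffsets(10000, 2.0);

// In WebGL2, a buffer like this would typically be bound as a vertex attribute
// with gl.vertexAttribDivisor(location, 1) so it advances once per instance,
// and the shared geometry drawn with a single gl.drawArraysInstanced(...) call.
```

The design point is that the per-instance data stays tiny (three floats here) compared to re-sending the full geometry for every particle.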

GPGPU is the idea that we can also use the GPU for more than just graphics. In our case, we use the GPU to calculate the position of all those particles.
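As a rough illustration, here is a CPU stand-in for what a GPGPU position pass does. On the GPU the positions would live in a floating-point texture and the loop body would be a fragment shader; the function name `simulationPass` and the buffer layout are assumptions made for this sketch.

```typescript
// CPU stand-in for one GPGPU pass: each loop iteration plays the role of one
// fragment shader invocation that writes a single RGBA texel.
function simulationPass(
  readPositions: Float32Array, // "texture" being sampled, xyzw per particle
  velocities: Float32Array, // xyzw per particle, w unused
  dt: number,
  writePositions: Float32Array // "render target" for this pass
): void {
  const texels = readPositions.length / 4;
  for (let i = 0; i < texels; i++) {
    const o = i * 4;
    writePositions[o + 0] = readPositions[o + 0] + velocities[o + 0] * dt;
    writePositions[o + 1] = readPositions[o + 1] + velocities[o + 1] * dt;
    writePositions[o + 2] = readPositions[o + 2] + velocities[o + 2] * dt;
    writePositions[o + 3] = readPositions[o + 3]; // pass w through untouched
  }
}

// Ping-pong: a pass cannot read and write the same texture, so two buffers
// swap roles every frame.
let readBuf = new Float32Array([0, 0, 0, 1, 1, 1, 1, 1]);
let writeBuf = new Float32Array(readBuf.length);
const velBuf = new Float32Array([1, 0, 0, 0, 0, 2, 0, 0]);

for (let frame = 0; frame < 3; frame++) {
  simulationPass(readBuf, velBuf, 0.5, writeBuf);
  [readBuf, writeBuf] = [writeBuf, readBuf]; // latest result is now in readBuf
}
```

The swap at the end of each frame is the part that carries over directly to the GPU version, where the two buffers are render targets.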

Getting started

- Rendering 100k spheres with instancing

- Instancing with ThreeJS

- FBO Particles from scratch

- Akella creating particles with Three's GPUComputationRenderer

Particle angle

This simulation uses thin rectangles instead of spheres. To make them face the direction they are moving, spite uses their velocity (change in position) to calculate a rotation matrix.
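One common way to turn a velocity into a rotation is to build an orthonormal basis around it. The sketch below is not spite's actual shader code; `rotationFromVelocity`, the choice of world up, and the column order are all assumptions for illustration.

```typescript
type Vec3 = [number, number, number];

const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];

const normalize = (v: Vec3): Vec3 => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
};

// Returns a column-major 3x3 matrix whose third column (local +Z) points
// along the velocity. A stationary particle gets the identity rotation.
function rotationFromVelocity(velocity: Vec3, worldUp: Vec3 = [0, 1, 0]): number[] {
  if (Math.hypot(velocity[0], velocity[1], velocity[2]) === 0) {
    return [1, 0, 0, 0, 1, 0, 0, 0, 1];
  }
  const forward = normalize(velocity);
  let right = cross(worldUp, forward);
  // If the velocity is parallel to worldUp the cross product vanishes,
  // so fall back to a different reference axis.
  if (Math.hypot(right[0], right[1], right[2]) < 1e-6) {
    right = cross([1, 0, 0], forward);
  }
  right = normalize(right);
  const up = cross(forward, right);
  return [...right, ...up, ...forward];
}

// A particle moving along +X ends up with its local Z axis pointing along +X.
const facingX = rotationFromVelocity([3, 0, 0]);
```

In a real particle system this would usually run in the vertex shader, per instance, with the velocity read from the simulation texture.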

Also, if you place the mouse in the center, you'll notice they almost seem to flow around a sphere. This is done by not allowing the particle's position to have a length less than 1.
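That length constraint can be sketched in a few lines. The function name `keepOutsideSphere` and the handling of a particle sitting exactly at the origin are assumptions; the original presumably does the equivalent inside its simulation shader.

```typescript
type Vec3 = [number, number, number];

// Pushes any position that falls inside the sphere back onto its surface.
// Positions already outside are returned unchanged.
function keepOutsideSphere(p: Vec3, radius = 1): Vec3 {
  const len = Math.hypot(p[0], p[1], p[2]);
  if (len >= radius) return p;
  // Assumption: a particle exactly at the center has no direction to be
  // pushed along, so pick an arbitrary one.
  if (len === 0) return [radius, 0, 0];
  const s = radius / len;
  return [p[0] * s, p[1] * s, p[2] * s];
}

const pushedOut = keepOutsideSphere([0.5, 0, 0]); // inside, moved to the surface
const untouched = keepOutsideSphere([0, 3, 4]); // length 5, already outside
```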

Particles around a model

Brute is a simulation determined by the weather conditions of the wine.

To position its particles, it reads the bottle's vertices and uses them as the particles' initial positions. After a while, the particles die off and are reborn at their initial positions.
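A rough CPU sketch of that spawn, die, and respawn cycle is below. The names (`stepParticles`, `spawnPoints`, `lifespan`) and the fixed lifespan are assumptions for illustration; in the real project the spawn points would come from the bottle mesh and the update would run on the GPU.

```typescript
// Advances every particle by one step. A particle whose life runs out is
// moved back to its spawn point (its vertex on the model) and given a new life.
function stepParticles(
  positions: Float32Array, // xyz per particle, updated in place
  velocities: Float32Array, // xyz per particle
  life: Float32Array, // remaining life per particle, in seconds
  spawnPoints: Float32Array, // xyz per particle, taken from the model vertices
  dt: number,
  lifespan: number
): void {
  for (let i = 0; i < life.length; i++) {
    const o = i * 3;
    life[i] -= dt;
    if (life[i] <= 0) {
      // Reborn at the initial position.
      positions[o] = spawnPoints[o];
      positions[o + 1] = spawnPoints[o + 1];
      positions[o + 2] = spawnPoints[o + 2];
      life[i] = lifespan;
    } else {
      positions[o] += velocities[o] * dt;
      positions[o + 1] += velocities[o + 1] * dt;
      positions[o + 2] += velocities[o + 2] * dt;
    }
  }
}

// One particle spawned from a single hypothetical "vertex" at (1, 2, 3).
const spawn = new Float32Array([1, 2, 3]);
const pos = new Float32Array(spawn); // start at the spawn point
const vel = new Float32Array([1, 0, 0]);
const lifeLeft = new Float32Array([1]);

stepParticles(pos, vel, lifeLeft, spawn, 0.5, 1); // drifts to x = 1.5
```

Giving each particle a slightly different starting life (not done here) would keep them from all respawning on the same frame.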

Lastly, it renders the bottle model the same color as the background to hide the particles behind it and help the particles create a better outline.

Music and simulations

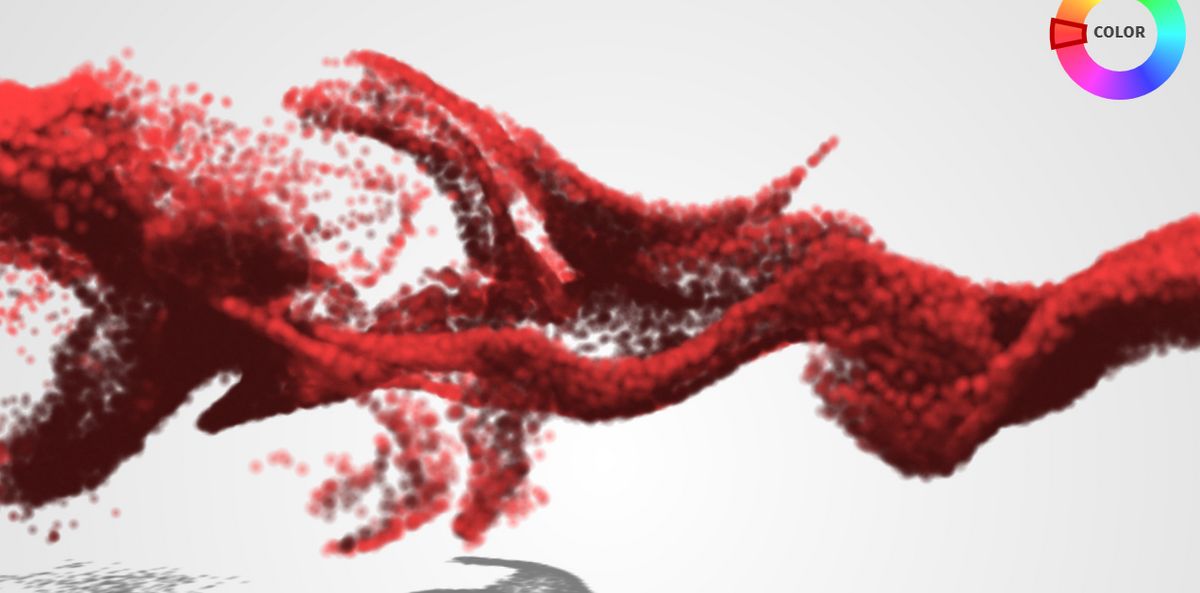

Synthesia feels like it has infinite combinations. Yet the simulation is controlled by only 6 parameters: spin force, offset time, attractor, color change, explosion force, and speed.

The change in music and creative combination of a few parameters give the project so much depth and playability.

Half-angle slice sorting

Here David Li does an advanced technique called half-angle slice sorting to create volumetric particle shadowing.

Here's a visualization of the sorting that's happening

The basis of this technique is the half-angle vector, which sits halfway between the view and light directions: slices perpendicular to it face both the camera and the light source.

If we sort them based on perpendicular slices of the half-angle, we can accumulate shadowing to the shadow buffer and render the scene to the screen at the same time.
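Here is a minimal CPU sketch of the sorting step only, not David Li's implementation. It assumes both direction vectors point from the scene toward the camera and the light, that the half vector is flipped when the camera faces the light, and that slices nearest the light are processed first; `halfAngleSlices` and its parameters are names made up for this example.

```typescript
type Vec3 = [number, number, number];

const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

const normalize = (v: Vec3): Vec3 => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
};

function halfAngleSlices(
  positions: Vec3[],
  toCamera: Vec3,
  toLight: Vec3,
  sliceCount: number
): { half: Vec3; inverted: boolean; slices: number[][] } {
  const v = normalize(toCamera);
  const l = normalize(toLight);
  // When camera and light are on opposite sides, flip the view vector so the
  // half vector still lies between the two directions.
  const inverted = dot(v, l) < 0;
  const sign = inverted ? -1 : 1;
  const half = normalize([l[0] + sign * v[0], l[1] + sign * v[1], l[2] + sign * v[2]]);

  // Sort particle indices along the half vector, nearest to the light first.
  const order = positions
    .map((_, i) => i)
    .sort((a, b) => dot(positions[b], half) - dot(positions[a], half));

  // Cut the sorted list into slices. Each slice would be drawn to the screen
  // (reading the shadow buffer) and then into the shadow buffer before the
  // next slice is processed.
  const perSlice = Math.ceil(order.length / sliceCount);
  const slices: number[][] = [];
  for (let i = 0; i < order.length; i += perSlice) {
    slices.push(order.slice(i, i + perSlice));
  }
  return { half, inverted, slices };
}

const sorted = halfAngleSlices(
  [[0, 0, 0], [0, 0, 3], [0, 0, 1], [0, 0, 2]],
  [0, 0, 1], // toward the camera
  [0, 0, 1], // toward the light
  2
);
```

In a GPU version the sort itself would also run on the GPU, and the `inverted` flag would decide whether slices are blended to the screen front-to-back or back-to-front.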

Particle color blending

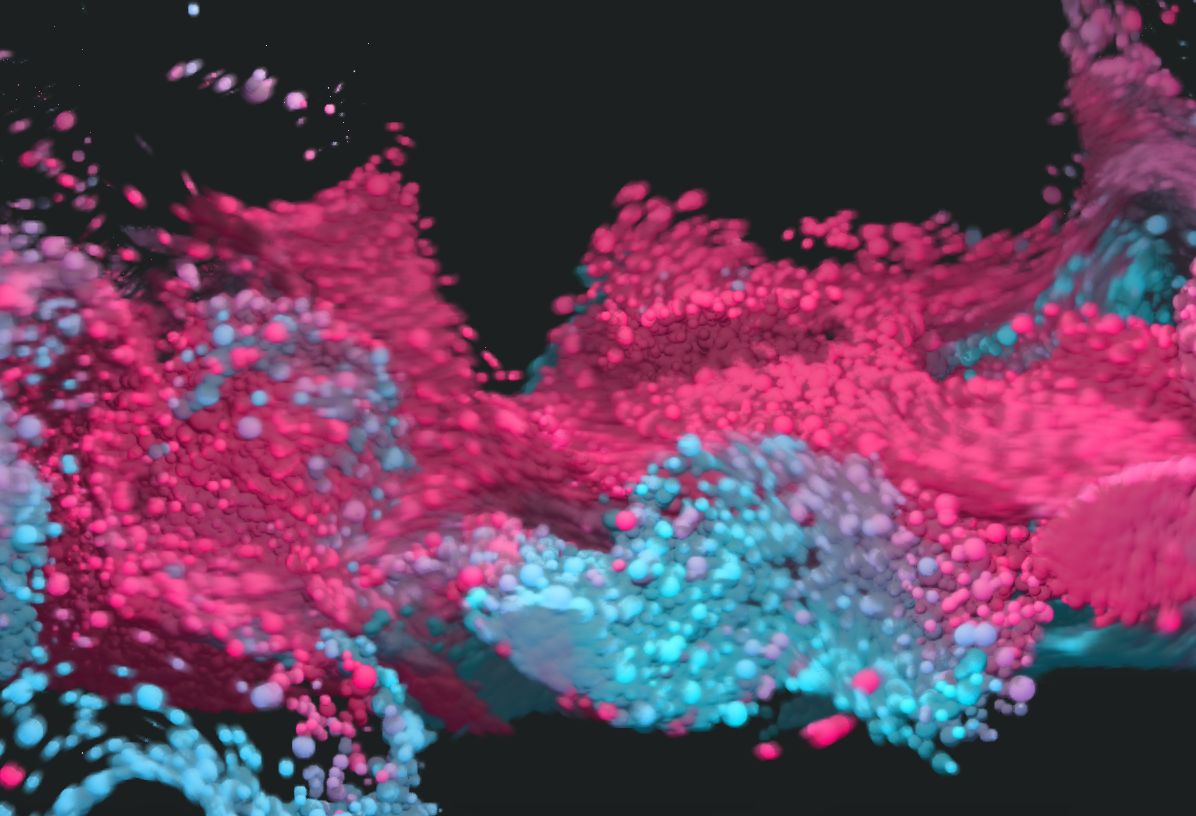

Hypermix has a lot of complicated things going on, but we are only going to focus on how it blends colors. This is done with multiple render passes:

First, Edan renders some data to a texture (GPGPU): depth, distance to the sphere's center, and life. Then, the "additive texture" of color is blurred to create that slight blob between the spheres.

Second, he renders the scene with just the shadows to a texture.

And finally, he renders the regular scene using the additive texture data to calculate the blended color, and the shadow texture to give shape to the sphere.